Dissecting a Research Paper on Radar Security in Ultra-Wideband Sensors

How Safe are Radar Sensors from Adversarial Attack?

This post reviews research on radar security and on how vulnerable a particular type of radar is to adversarial attack. I have detailed my take on the research and its findings, as well as what we can learn from it about improving security in radar-based environments.

This paper is titled Adversarial Attack on Radar-based Environment Perception Systems. I was already familiar with traditional radar-based systems in or related to vehicles, such as police speed radar, parking sensors, and cruise control sensors. However, the paper’s brief describes the focus of this research as adversarial attacks against ultra-wideband (UWB) radar sensors. I wasn’t entirely familiar with this particular application, since UWB covers a broad range of electromagnetic frequencies and practical applications.

What is Ultra-Wideband and How Does it Work in Radar Perception Systems?

As a background to this paper, I wanted to add some basic context about ultra-wideband that was not clearly defined in the research paper.

The FCC considers UWB frequencies to be in the 3.1 to 10.6 GHz range, with channel widths ranging from 500 MHz to 7 GHz. These frequencies are primarily for communications rather than radar perception, but the spectrum generally appears to overlap. UWB communications may be used in satellite communications, 5G UW cellular, vehicle-to-vehicle comms, or vehicle-to-ground comms. In this paper, UWB is used for environmental sensing in applications such as automotive radar cruise control, parking assist, and self-driving AI.
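To make the definition a little more concrete, here is a minimal sketch of my own (not from the paper) that checks whether a band counts as UWB. It assumes the commonly cited FCC criteria of at least 500 MHz of bandwidth or a fractional bandwidth of at least 0.20, measured between the band edges; the function names are hypothetical.

```python
def fractional_bandwidth(f_low_hz, f_high_hz):
    """Bandwidth relative to the center frequency: 2 * (fH - fL) / (fH + fL)."""
    return 2.0 * (f_high_hz - f_low_hz) / (f_high_hz + f_low_hz)


def is_uwb(f_low_hz, f_high_hz):
    """Assumed criteria: bandwidth >= 500 MHz OR fractional bandwidth >= 0.20."""
    bandwidth_hz = f_high_hz - f_low_hz
    return bandwidth_hz >= 500e6 or fractional_bandwidth(f_low_hz, f_high_hz) >= 0.20


# The full 3.1 to 10.6 GHz band easily qualifies.
print(is_uwb(3.1e9, 10.6e9))
# A 250 MHz slice near 24 GHz (a narrow-band example) does not.
print(is_uwb(24.0e9, 24.25e9))
```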

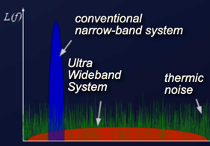

The figure above gives a rough idea of the difference between conventional narrow-band radar systems and UWB. The red bubble shows a much broader bandwidth at significantly lower power. Pulses are also sent in very short bursts of approximately 1 ns.

The general idea is that a radar perception system sends out pulses and captures where, when, and from which direction the reflections of those pulses are received. Given the high frequency and broad bandwidth of UWB, there is much more data to process than in traditional radar systems, so the equipment must be very sensitive and precise.

Similarly, the logic behind processing these signals requires powerful hardware and software.
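The pulse-and-echo idea comes down to two textbook relations, sketched below as my own illustration rather than anything taken from the paper: range is half the round-trip delay times the speed of light, and the finest range separation a radar can resolve is roughly c / (2B) for bandwidth B. The function names are hypothetical.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_delay(round_trip_s):
    """Distance to a reflector given the round-trip time of the pulse."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def range_resolution(bandwidth_hz):
    """Approximate smallest separable range difference for a given bandwidth."""
    return SPEED_OF_LIGHT_M_S / (2.0 * bandwidth_hz)


# An echo arriving 1 ns after transmission comes from roughly 15 cm away.
print(range_from_delay(1e-9))
# 500 MHz of bandwidth resolves about 30 cm; 7.5 GHz resolves about 2 cm.
print(range_resolution(500e6), range_resolution(7.5e9))
```

This is why the timing precision matters so much: at these scales a nanosecond of error is already a noticeable distance.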

What the Research Delivered: Adversarial Training to Block Attackers

The paper focuses on the machine learning (ML) aspect of processing these signals to formulate an attack. As signals are sent, reflected, and received, the software/ML identifies and “classifies” various signatures in the signal into real-world objects.

The goal of the adversarial attacks is to fool the ML with signature-based jamming, such that the ML would fail to properly classify an object. From the perspective of the perception system, doing so would mean a real-world object would not be detected.

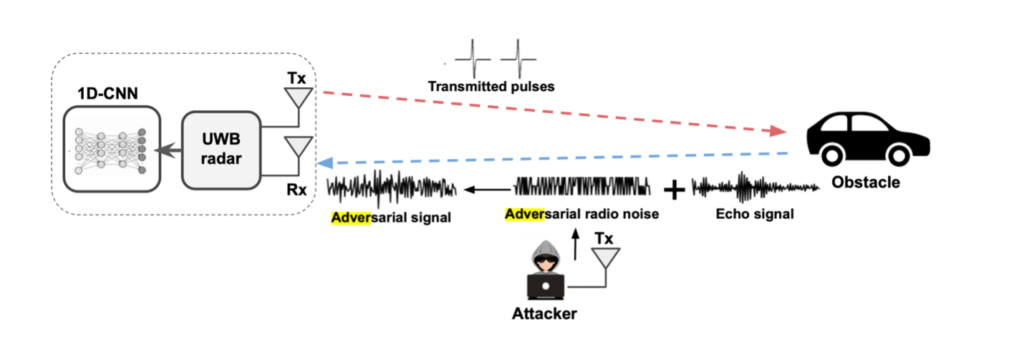

The figure above shows a high-level threat model of the adversarial attacks.

The paper proposes an input-agnostic, or universal adversarial perturbation (UAP), as the basis of a signal, called a “patch”. Implementation of a UAP is an important part of the research paper – the authors explicitly say that a “reactive” attack (one that is adjusted and timed perfectly for a given victim signal) would be nearly impossible due to the complexity of the signal and the hardware necessary to process the signal, let alone transmit it accurately.

It’s also important to recognize that the attacks in this paper are all performed in well-controlled lab situations, with predictable signals being sent from the victim at a fixed location.

The universal attack, also known as the Adversarial Radio Noise Attack (a-RNA), is broken down into several components, with attack and defense strategies noted for each. After developing a UAP to target a particular object signature, the next challenge for the attacker is to time the patch so that it overlaps the victim’s reflected echo signal. Any part of the patch that doesn’t overlap the echo is effectively neutralized.
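To illustrate why timing matters, here is a small sketch of my own (not the paper's method) that treats the echo and the patch as simple time windows and measures how much of the echo the patch actually covers. The function name and the nanosecond units are assumptions for illustration.

```python
def overlap_fraction(echo_start, echo_len, patch_start, patch_len):
    """Fraction of the echo window that is covered by the patch window."""
    start = max(echo_start, patch_start)
    end = min(echo_start + echo_len, patch_start + patch_len)
    return max(0.0, end - start) / echo_len


# Times in nanoseconds: a 1 ns echo starting at t = 10 ns.
print(overlap_fraction(10.0, 1.0, 10.0, 1.0))  # perfectly timed patch
print(overlap_fraction(10.0, 1.0, 10.5, 1.0))  # patch arrives half a pulse late
print(overlap_fraction(10.0, 1.0, 12.0, 1.0))  # patch misses the echo entirely
```

With pulses this short, even a sub-nanosecond timing error removes a large share of the overlap.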

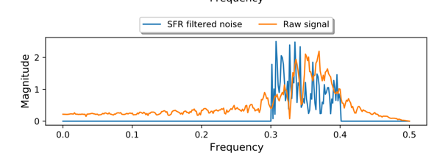

The next challenge is to limit the frequency spectrum to match the type of signal sent by a particular sender. To that end, the paper discusses strategies for building shift-resistant (timing) and filter-resistant (frequency/signal characteristics) patches, or SFR patches. An example of SFR patches is shown above.

Lastly, attackers may have to avoid spectrum sensing, whereby a sender listens for active environmental signals to detect adversarial attacks before transmitting/receiving its own signals. The primary solution for avoiding spectrum sensing is reactive jamming, where adversarial patches are only transmitted while a sender is transmitting/receiving.
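Spectrum sensing can be as simple as an energy detector. The sketch below is my own simplified illustration, not the defense evaluated in the paper: the sender listens before transmitting and flags the channel as busy if the average received power is well above the expected noise floor. The `margin` parameter and function names are hypothetical.

```python
import math
import random


def mean_power(samples):
    """Average power of a block of real-valued samples."""
    return sum(s * s for s in samples) / len(samples)


def channel_is_busy(samples, noise_power, margin=4.0):
    """Flag the channel when measured power exceeds margin * noise floor."""
    return mean_power(samples) > margin * noise_power


rng = random.Random(0)
noise = [rng.gauss(0.0, 1.0) for _ in range(1000)]           # quiet channel
jammed = [n + 3.0 * math.sin(0.3 * i) for i, n in enumerate(noise)]  # jammer on

print(channel_is_busy(noise, noise_power=1.0))
print(channel_is_busy(jammed, noise_power=1.0))
```

A jammer that transmits continuously shows up in the listening window, which is why the attacker would prefer to transmit only while the victim itself is active.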

In summary, the paper proposes a set of techniques for creating SFR patches called “Adversarial Training”, whereby the patches are progressively shifted and filtered to match the characteristics of the sender’s signals most accurately.
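As a rough sketch of the idea (my own simplification, not the paper's algorithm), a patch can be made robust by scoring it under many random shifts and filters rather than under one fixed alignment. Here a circular shift stands in for timing error and a moving average stands in for the victim's filter; `score` is a hypothetical callable representing whatever the attacker is optimizing.

```python
import random


def circular_shift(patch, k):
    """Rotate the patch right by k samples (negative k rotates left)."""
    k %= len(patch)
    return patch[-k:] + patch[:-k] if k else list(patch)


def moving_average(patch, width):
    """Crude circular low-pass filter; width is assumed to be odd."""
    n, half = len(patch), width // 2
    return [
        sum(patch[(i + j) % n] for j in range(-half, half + 1)) / width
        for i in range(n)
    ]


def random_transform(patch, rng, max_shift, widths=(1, 3, 5)):
    """Apply one random shift and one random filter to the patch."""
    shifted = circular_shift(patch, rng.randint(-max_shift, max_shift))
    return moving_average(shifted, rng.choice(widths))


def average_over_transforms(patch, score, rng, trials, max_shift):
    """Mean score of the patch across many random shift/filter combinations."""
    total = sum(score(random_transform(patch, rng, max_shift)) for _ in range(trials))
    return total / trials
```

In a real training loop, the patch would be updated to improve this averaged score, so that it keeps working even when the timing and filtering on the victim's side are not known exactly.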

What I Expected to Learn: What Are the Real-World Consequences of Attacks on Radar Systems?

I expected this research to talk not only about the attacks, but also about some of the real-world systems they target and the consequences of compromising those systems. In vehicles, for instance, radar-based cruise control, parking assist, and semi-autonomous driving are crucial to the vehicle’s perception of the road and surrounding environment. Without sufficient hardening and safeguards, any type of radar jamming that successfully defeats this perception could have severe consequences for life and property.

Additionally, from a legal perspective, any type of radar jamming comes with severe criminal penalties in the U.S., Canada, and EU.

Though the paper introduces attacks that have serious consequences against UWB-radar systems in a lab, the conditions are well-controlled. The algorithms appear to rely upon a large number of events to heuristically tune a more accurate attack for a particular sender. Their effectiveness is modeled in probabilities over large sample sizes in controlled circumstances. In the real world, the sample sizes are likely to be much smaller, reducing the likelihood of success.

Given the weak strength of the signals, the paper mentions that effectiveness in the real world is also limited to line-of-sight (LoS) at close range. In the real world, LoS would be difficult for stationary attackers to maintain but may be feasible for moving ones, such as vehicles in traffic.

Lastly, a discussion of the consequences of a successful attack would have been useful. A concrete example proving that a sender, such as a vehicle, failed to detect a significant object, such as a person, bicycle, animal, other vehicle, etc. would be more powerful than statistical analysis of the likelihood of success. One challenge of the attacks is that while attackers may know the inner workings of the ML algorithm based on UWB signals, they do not have access to the victim’s device to know whether the attack has been successful.

Takeaways About Radio Security from this Research

Without question, adversarial attacks against UWB-radar perception systems deserve serious attention from the manufacturers who implement them, to ensure proper defensive strategies are employed. At a basic level, shifting, filtering, and spectrum sensing should be implemented. However, as the paper demonstrates, strategies for shift avoidance and filter avoidance, along with theoretical strategies for spectrum sensing avoidance, are possible.

While these attacks are less likely to occur in the real world, further research needs to be conducted to develop hardened defense strategies against reactive and a-RNA radar perception system attacks.
